Hi! My name is Phillip, and I am a rising junior at Amherst College majoring in math and biology! Broadly, I am interested in applied math, AI, and developmental biology. In particular, I want to explore how these subjects can improve our understanding of human health, whether by developing products and treatments in industry or by doing basic biology research in academia. I don’t have set career plans yet, but I am considering graduate school in applied math or in quantitative biology fields like bioinformatics.

This summer, I will be working at Cold Spring Harbor Laboratory (CSHL) on Long Island, New York, with Dr. Peter Koo, Dr. David McCandlish, and Shushan Toneyan on research in quantitative biology. This is a great opportunity for me to experience quantitative biology for the first time. At Amherst, I have done research in math and in biology separately, but before CSHL I hadn’t found an opportunity to combine my interests in the two fields in a research setting.

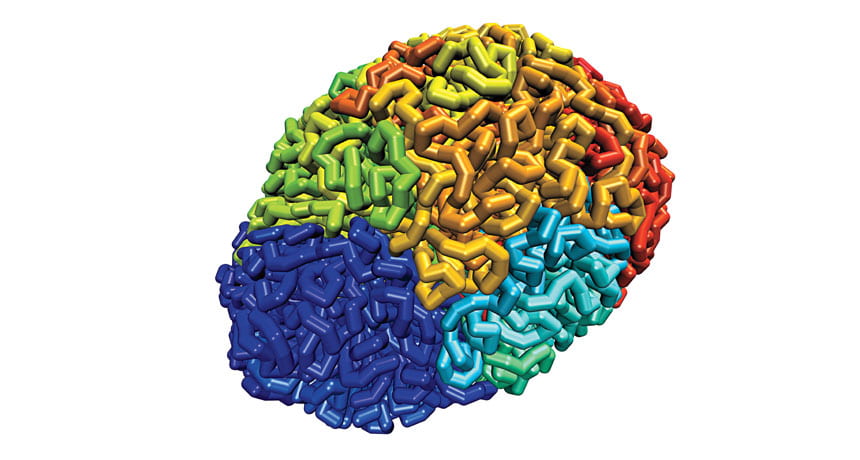

My research this summer applies neural networks to learning about the human genome and its architecture. Broadly, neural networks are algorithms that are trained to recognize patterns in the data they are given and then look for those patterns in new data. They can be used on all sorts of datasets, including images (https://www.craiyon.com/, anyone?), and are quite useful in real life. Besides predicting how proteins fold, allowing cars to drive themselves, and diagnosing diseases, neural networks can learn patterns from DNA.

DNA, the molecule that carries much of the information that makes us, well, us, encodes that information in the sequence of nucleotides it is composed of. In recent years, scientists have discovered that DNA is not a disordered mass inside cells; in fact, it has a very complex, multi-scale structure that is essential for proper gene regulation. (A simulation that attempts to capture DNA folding is the picture on this post!) Disruptions to the architecture of the genome can cause all sorts of diseases, from polydactyly to cancers. Proteins called transcription factors help maintain this architecture: they recognize specific short strings of nucleotides in DNA, called motifs, bind to them directly, and change the shape of DNA strands in cells.

Neural networks can take sequences of nucleotides (like ATACATGTGT) as input and, once trained, predict how the genome will fold. However, many neural networks are hard to interpret: they can learn patterns that are not obvious to us humans. A major goal of the Koo Lab is to answer the question “What do neural networks actually learn?” This question matters in genomics because we want to know whether neural networks can, without human instruction, learn meaningful aspects of biology to make their predictions. I will be developing “in silico” (virtual) experiments to test whether neural networks actually use transcription factor motifs when making their predictions about genome folding.
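To make the idea of an in silico experiment a little more concrete, here is a minimal Python sketch of one common design: encode DNA as one-hot vectors, embed a motif into random background sequences, and measure how much a model’s prediction changes. This is not the Koo Lab’s actual code or model. `toy_model` is a hypothetical stand-in for a trained network that responds to a single made-up motif (`CCGCGG`), it outputs one number rather than a genome-folding prediction, and all function names here are illustrative assumptions.

```python
import random

BASES = "ACGT"


def one_hot(seq: str) -> list[list[int]]:
    """Encode a DNA string as a list of 4-element one-hot vectors (A, C, G, T)."""
    return [[1 if base == b else 0 for b in BASES] for base in seq.upper()]


def toy_model(x: list[list[int]], motif: str = "CCGCGG") -> float:
    """Hypothetical stand-in for a trained network.

    Slides one fixed filter (the one-hot motif) along the input and returns
    the best match as a fraction of the motif length (1.0 = perfect match).
    A real genome-folding model is far larger and predicts much more than one number.
    """
    w = one_hot(motif)
    k = len(w)
    best = 0
    for i in range(len(x) - k + 1):
        score = sum(x[i + j][c] * w[j][c] for j in range(k) for c in range(4))
        best = max(best, score)
    return best / k


def insert_motif(seq: str, motif: str, pos: int) -> str:
    """Overwrite seq at position pos with motif, keeping the length unchanged."""
    return seq[:pos] + motif + seq[pos + len(motif):]


def random_backgrounds(n: int, length: int, seed: int = 0) -> list[str]:
    """Generate n random DNA sequences to serve as neutral backgrounds."""
    rng = random.Random(seed)
    return ["".join(rng.choice(BASES) for _ in range(length)) for _ in range(n)]


def motif_effect(model, backgrounds: list[str], motif: str, pos: int) -> float:
    """Average change in the model's output when motif is embedded at pos."""
    diffs = []
    for bg in backgrounds:
        before = model(one_hot(bg))
        after = model(one_hot(insert_motif(bg, motif, pos)))
        diffs.append(after - before)
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    backgrounds = random_backgrounds(n=100, length=50)
    # Compare the motif the toy model "knows" against an unrelated control sequence.
    print("motif effect:  ", motif_effect(toy_model, backgrounds, "CCGCGG", pos=20))
    print("control effect:", motif_effect(toy_model, backgrounds, "ATATAT", pos=20))
```

The logic of the comparison: if a model really relies on a motif, inserting it should shift predictions more than inserting a control sequence of the same length. Averaging over many random backgrounds helps separate the effect of the motif itself from the particular sequence it happens to land in.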

Honestly, I was surprised to be offered this position, because my background doesn’t exactly match the work I am doing this summer. While I have always been interested in learning more about bioinformatics, I have not taken any classes in the computer science department at Amherst. However, my current research involves a lot of biology, and it is firmly grounded in math. The term “neural network” suggests that these models think like living organisms. Their structure is biologically inspired (they are built from units called neurons), but to me, neural networks are universal function approximators: they adjust themselves to make their predictions as accurate as possible, without “thinking” about the data they are given. A lot of interesting math underlies neural networks, including optimization, linear algebra, probability theory, and information theory.

I arrived at Cold Spring Harbor thinking I would be setting the biology aside in favor of quantitative reasoning. Now, partway through the program, I see that understanding biology is absolutely essential to my project: at its core, my project is about learning biology by doing experiments, just on a computer with previously collected data instead of in a wet lab. As a result, my background in math and biology has helped me tremendously on this project, even though I had never done intensive coding or done biology outside of a wet lab before.

I’m really enjoying my summer so far. I feel like I am making progress toward the goals I set for myself for this program, including getting to know people in the lab and in my program, figuring out good next steps for my career, and learning more about quantitative biology. I hope to have more to share at the end of the program, but in the meantime, I highly recommend applying to this program if you are interested in studying quantitative biology, plant biology, neuroscience, cancer, or genetics. (Plus, Cold Spring Harbor has a train that goes directly to Penn Station, which makes CSHL great if you want to visit New York City on the weekends!) I’d be more than happy to talk more about this experience over email, so feel free to contact me at pzhou24@amherst.edu with any questions! Thank you very much for reading!